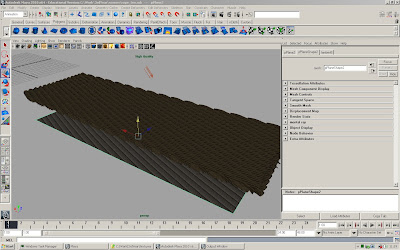

Okay... I started with the most fun task: a fire wall for Charlie's project. I haven't really done fire before, but it does look like a lot of fun. I found David Schoneveld's blog quite a while back and I really enjoy watching his tutorials. He's pretty much "the Daddy" when it comes to MayaFluids and I've learnt quite a lot from him. He also talked about SOuP, an open-source plugin bundle written by Peter Shipkov (http://petershipkov.com/development/SOuP/SOuP.htm), which brings a node-based workflow along the lines of Houdini's to Maya. Very cool stuff for all kinds of Maya-related problems.

The thing Schoneveld talks about is UpresFluid in SOuP. Like I said, most of the nodes are based on Houdini nodes, and so is UpresFluid.

The problem with MayaFluids is that the fluid container resolution changes the simulation (in some cases quite drastically). So when you start off with a low resolution to get the motion right in real time and then crank the resolution up for the shading, the high-resolution motion of the fluid can be significantly different from the low-resolution one. In Houdini you start with a low-res container and then upres it, which makes an exact copy of the low-res container but with the option to multiply the resolution. SOuP's upres fluid does exactly the same (Awesome!!!).
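To picture what "an exact copy with the resolution multiplied" means, here's a toy sketch in plain Python. It is not how SOuP or Houdini actually do it (I don't know their internals), and the names `upres_grid` and `multiplier` are made up for the illustration. It just copies a tiny 2D density grid into a finer one, so the coarse shape stays exactly where it was and only the cell count goes up.

```python
def upres_grid(grid, multiplier):
    """Return a finer copy of a 2D density grid.

    Toy illustration only: every high-res cell takes the value of the
    low-res cell it sits inside (nearest-neighbour sampling), so the
    overall shape of the low-res data is preserved.
    """
    if multiplier < 1:
        raise ValueError("multiplier must be >= 1")
    rows = len(grid)
    cols = len(grid[0])
    return [
        [grid[y // multiplier][x // multiplier] for x in range(cols * multiplier)]
        for y in range(rows * multiplier)
    ]


# A hypothetical 2x2 "container" with some density values.
low_res = [
    [0.0, 1.0],
    [0.5, 0.0],
]

# Multiply the resolution by 2 on each axis: 2x2 becomes 4x4.
high_res = upres_grid(low_res, 2)

for row in high_res:
    print(row)
```

A real upres adds new small-scale detail on top of the copied data, which this sketch doesn't attempt. The point is only that the low-res result drives the high-res one, instead of the sim being run again from scratch at a higher resolution.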

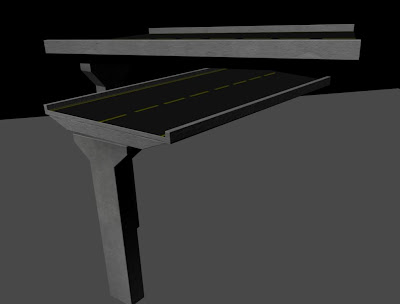

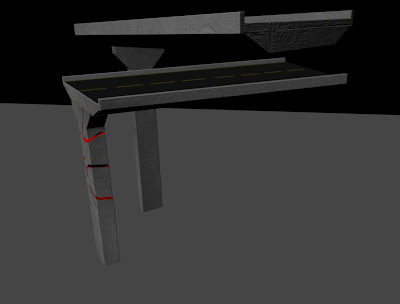

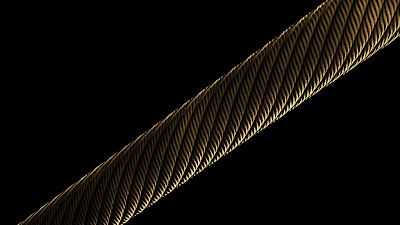

Now to the practice... Charlie and Luke are going to make a live-action film with quite a bit of VFX in it. One of the effects is gonna be a wall on fire, the best example to test upres fluid on.

I know it's not really good, but it shows how much more detail you can get out of the original MayaFluid sim. Another really handy option is the wavelet turbulence. I'm not quite sure how it works, but I know that changing some of the settings gets rid of the puffy look of the flames.

I've definitely got quite a bit more work to do.

P-Dawg is out!
